Analysis of Algorithms

- “Analysis of Algorithms” is concerned primarily with determining the memory (space) and time requirements (complexity) of an algorithm.

- The time complexity (or simply, complexity) of an algorithm is measured as a function of the problem size.

Some examples are given below.

- The complexity of an algorithm to sort n elements may be given as a function of n.

- The complexity of an algorithm to multiply an m*n matrix and an n*p matrix may be given as a function of m , n , and p.

How efficient is an algorithm or piece of code depends on a lots of resources, including:

- CPU (time) usage

- memory usage

- disk usage

- network usage

All are important but we will mostly talk about time complexity (CPU usage).

when analyzing an algorithm, one might find that the time (or the number of steps) it takes to complete a problem of size n

Units for measuring an algorithm’s running time

Identify the most important operation of the algorithm, called the basic operation , the operation contributing the most to the total running time, and compute the number of times the basic operation is executed.

- Assigning a value to a variable

- Calling a method

- Performing an arithmetic operation (for example, adding two numbers)

- Comparing two numbers

- Indexing into an array

- Following an object reference

- Returning from a method.

The time required by a function/procedure is proportional to the number of “basic operations” that it performs. Here are some examples of basic operations:

- one arithmetic operation (e.g., +, *).

- one assignment (e.g. x = 0)

- one test (e.g., x == 0)

- one read (of a primitive type: integer, float, character, boolean)

- one write (of a primitive type: integer, float, character, boolean)

Worst-Case, Best-Case, and Average-Case Efficiencies

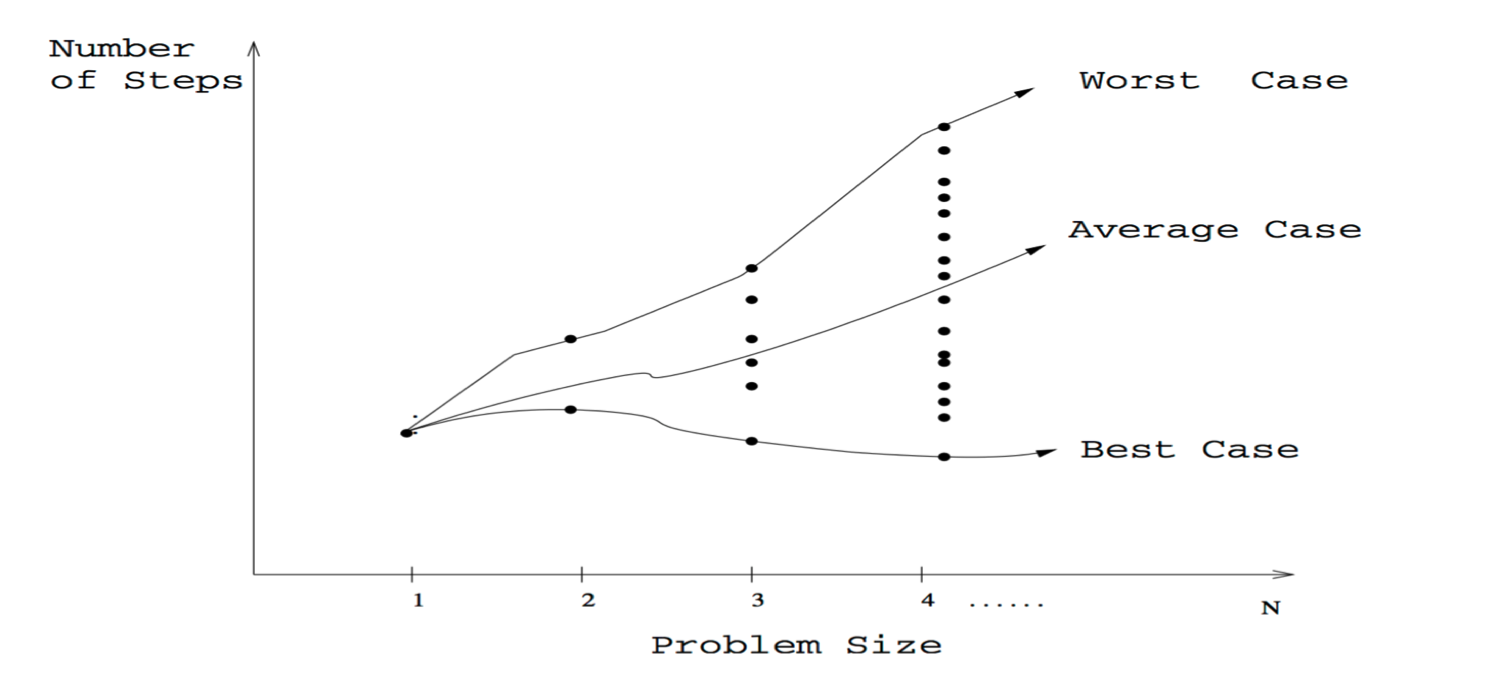

there are many algorithms for which running time depends not only on an input size but also on the specifics of a particular input.

- The worst-case complexity of the algorithm is the function defined by the maximum number of steps taken in any instance of size n. This represents the curve passing through the highest point in each column.

- The best-case complexity of the algorithm is the function defined by the minimum number of steps taken in any instance of size n. This represents the curve passing through the lowest point of each column.

- The average-case complexity of the algorithm, with is the function defined by the average number of steps over all instances of size n.

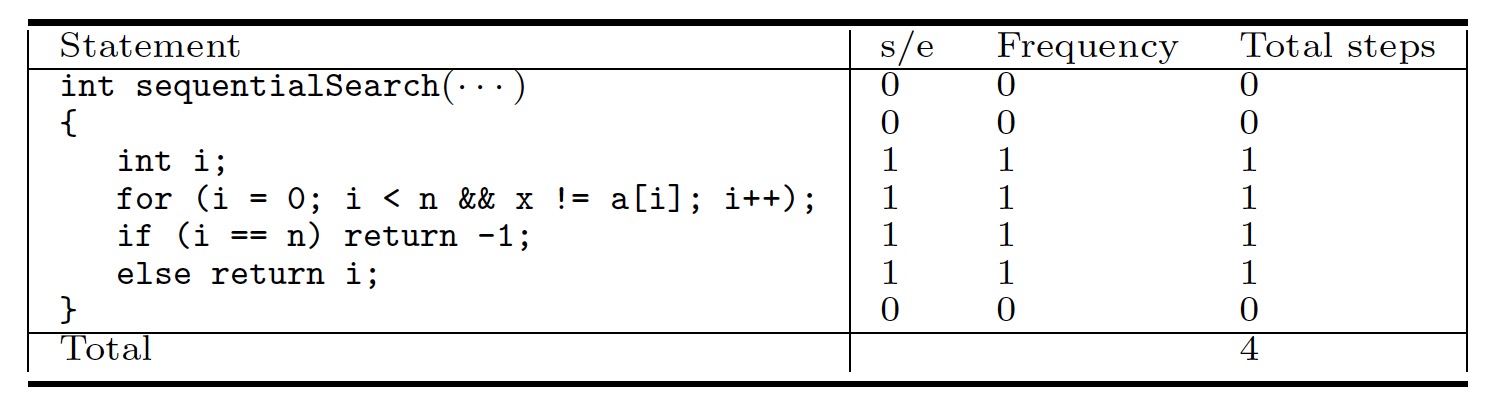

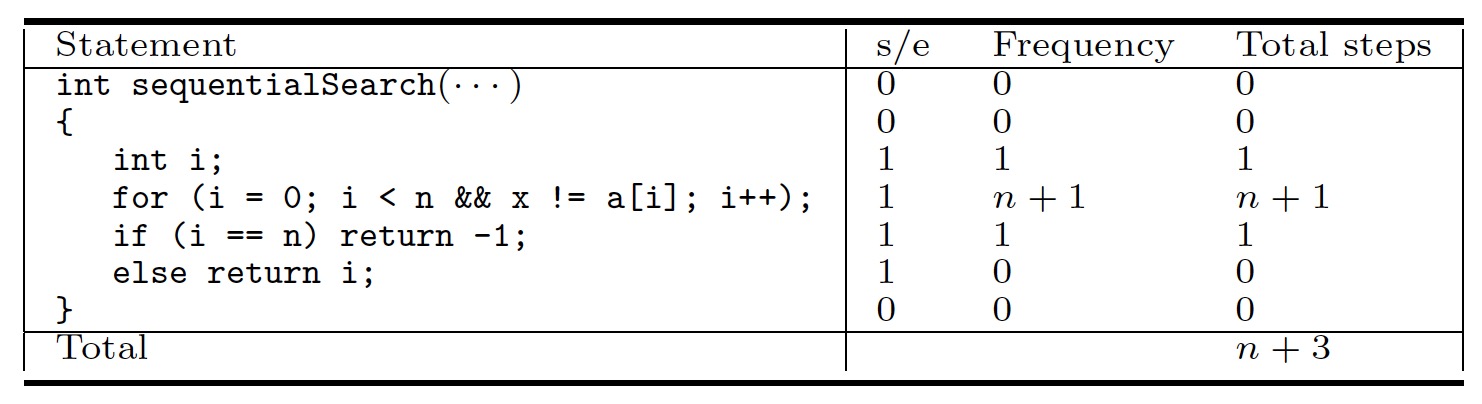

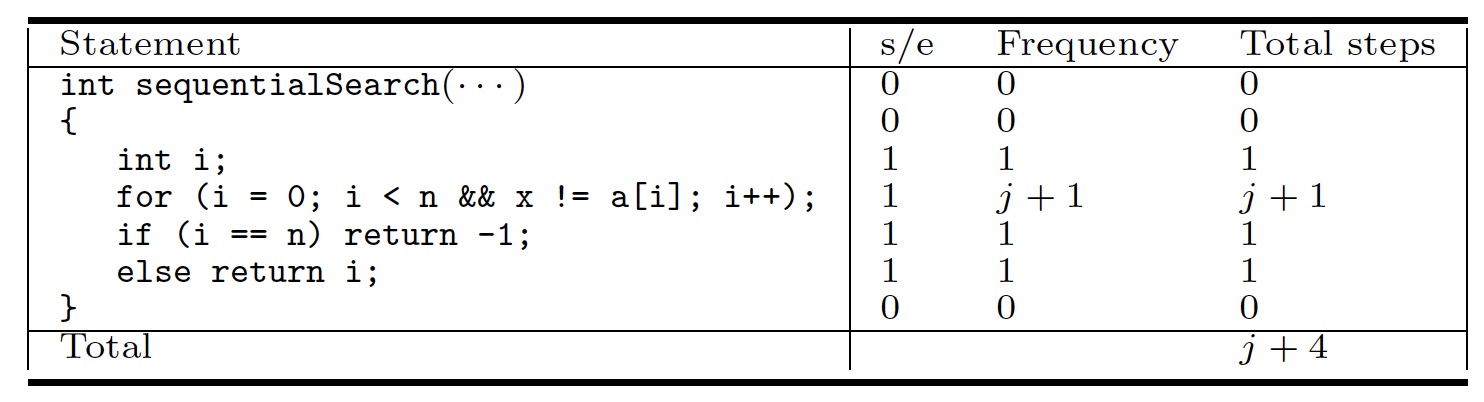

Consider, as an example, sequential search.

public int sequentialSearch(int[] a, int x) {

int n = a.length;

int i;

for (i = 0; i < n && x != a[i]; i++);

if(i == n) return -1;

return i;

}

Best Case

Worst Case

Average Case

Clearly, the worst-case analysis provides very important information about an algorithm’s efficiency by bounding its running time from above.

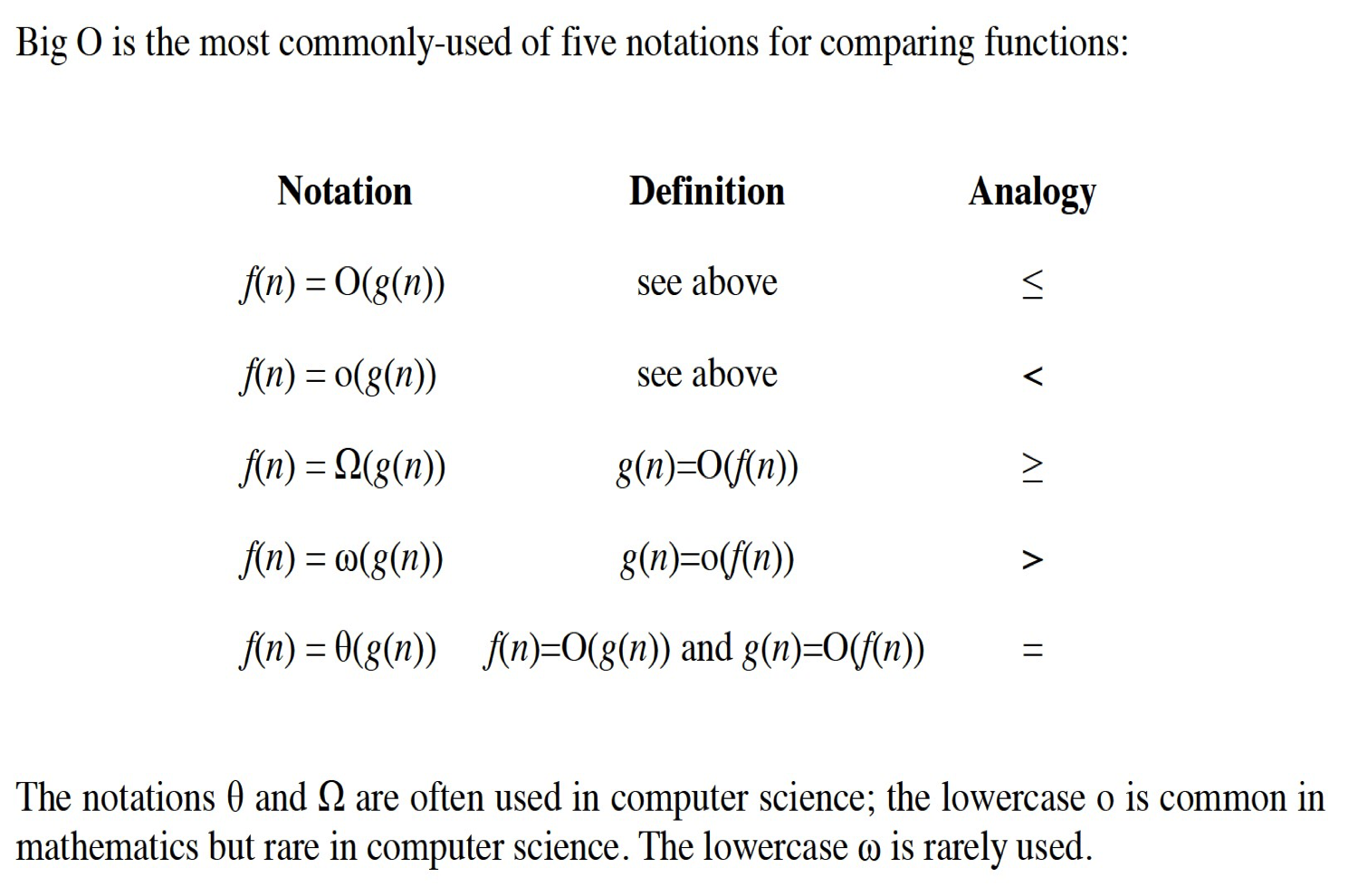

The important thing to realize is that each of these time complexities define a numerical function, representing time versus problem size. Time complexities are complicated functions and we need to simplify that to work with them For this, we need the “Big Oh” notation.

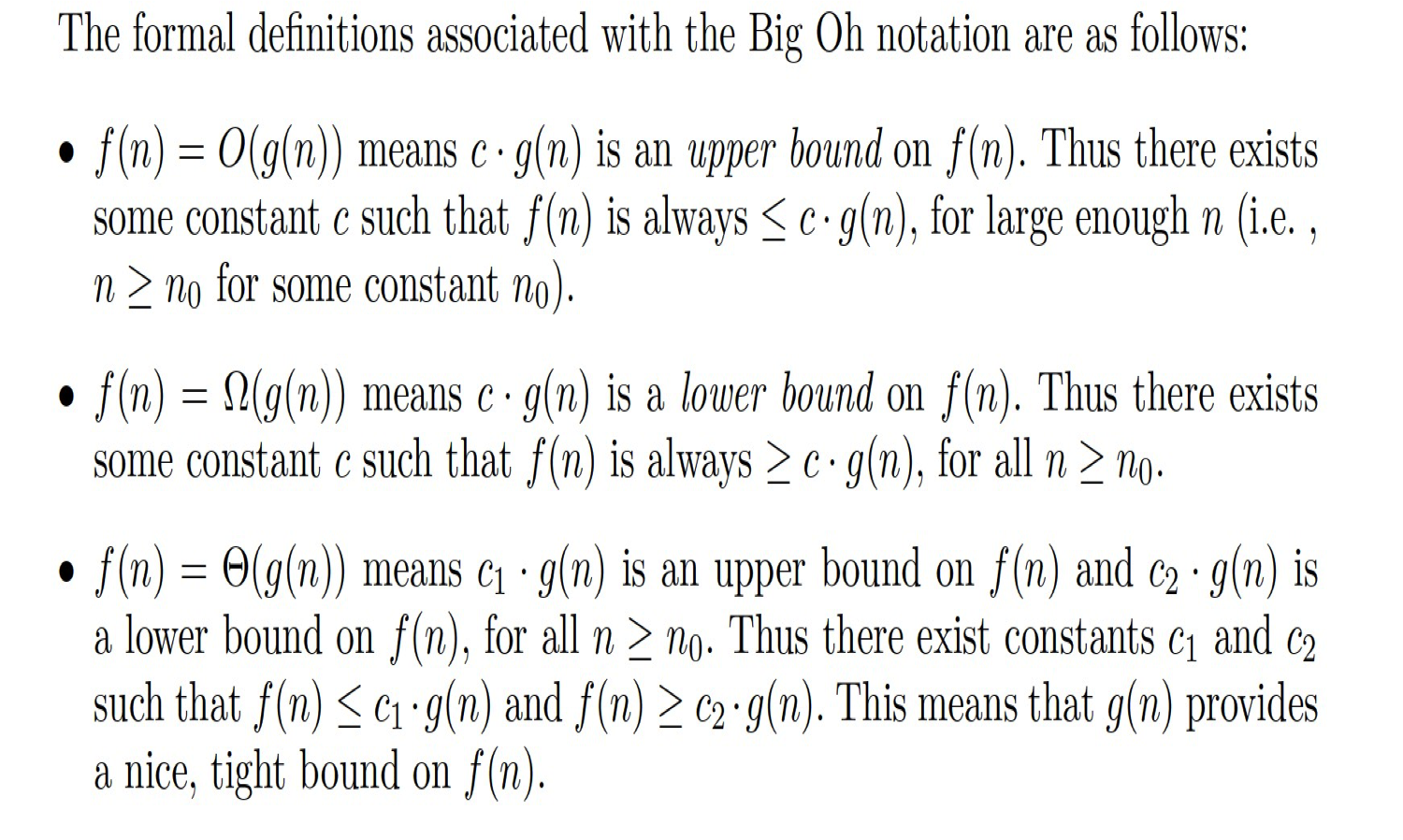

The Big Oh Notation

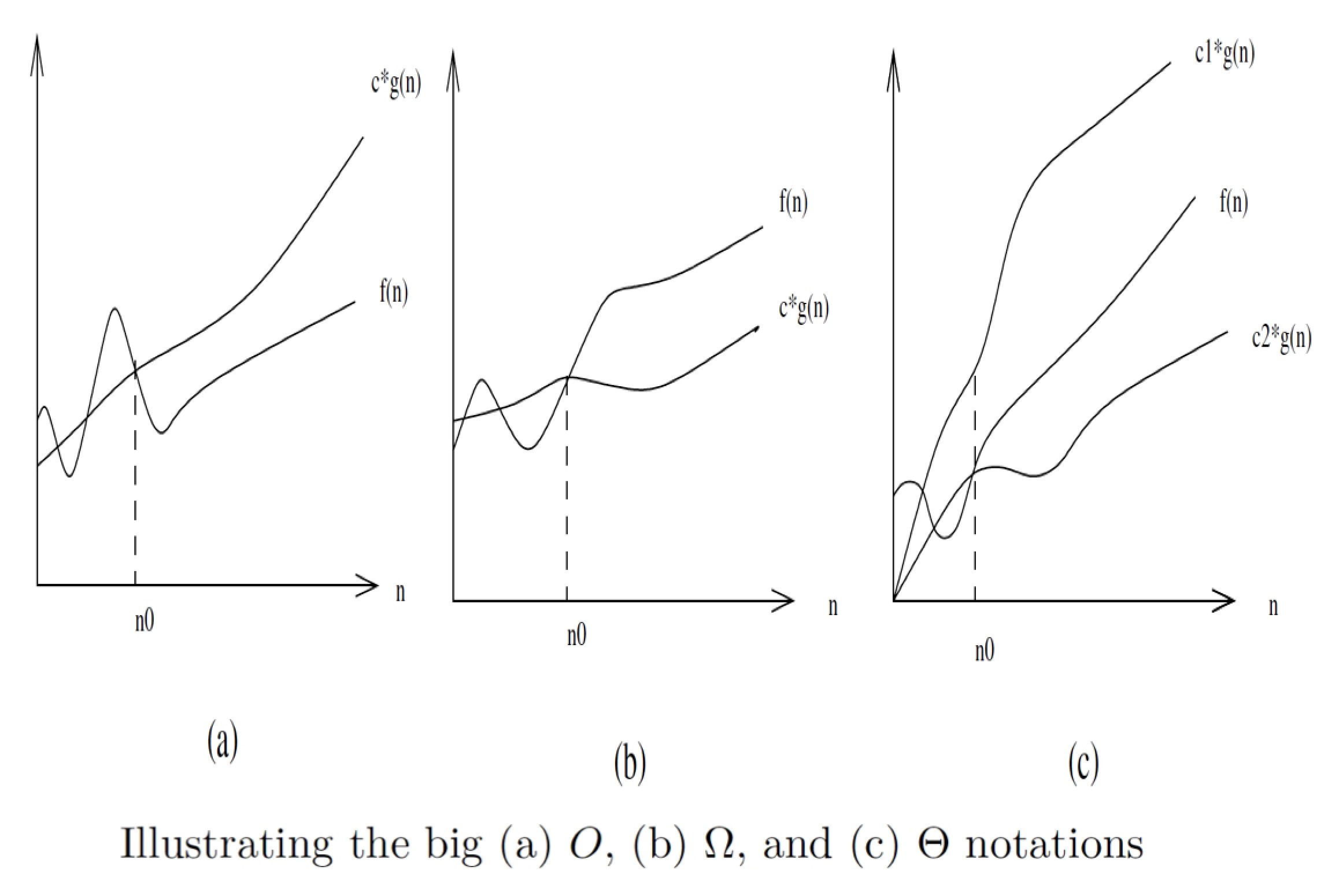

when analyzing some algorithm, one might find that the time (or the number of steps)it takes to complete a problem of size n is given by T(n)=4n2 -2n+2. If we ignore constants (which makes sense because those depend on the particular hardware the program is run on) and slower growing terms, we could say “T(n) grows at the order of n ” and write: T(n) = O(n2).

-

- It proves to be much easier to talk in terms of simple upper and lower bounds of time-complexity functions using the Big Oh notation. The Big Oh simplifies our analysis by ignoring levels of detail that do not impact our comparison of algorithms.

- The Big Oh notation ignores the difference between multiplicative constants. The functions f(n) = 2n and g(n) = n are identical in Big Oh analysis.

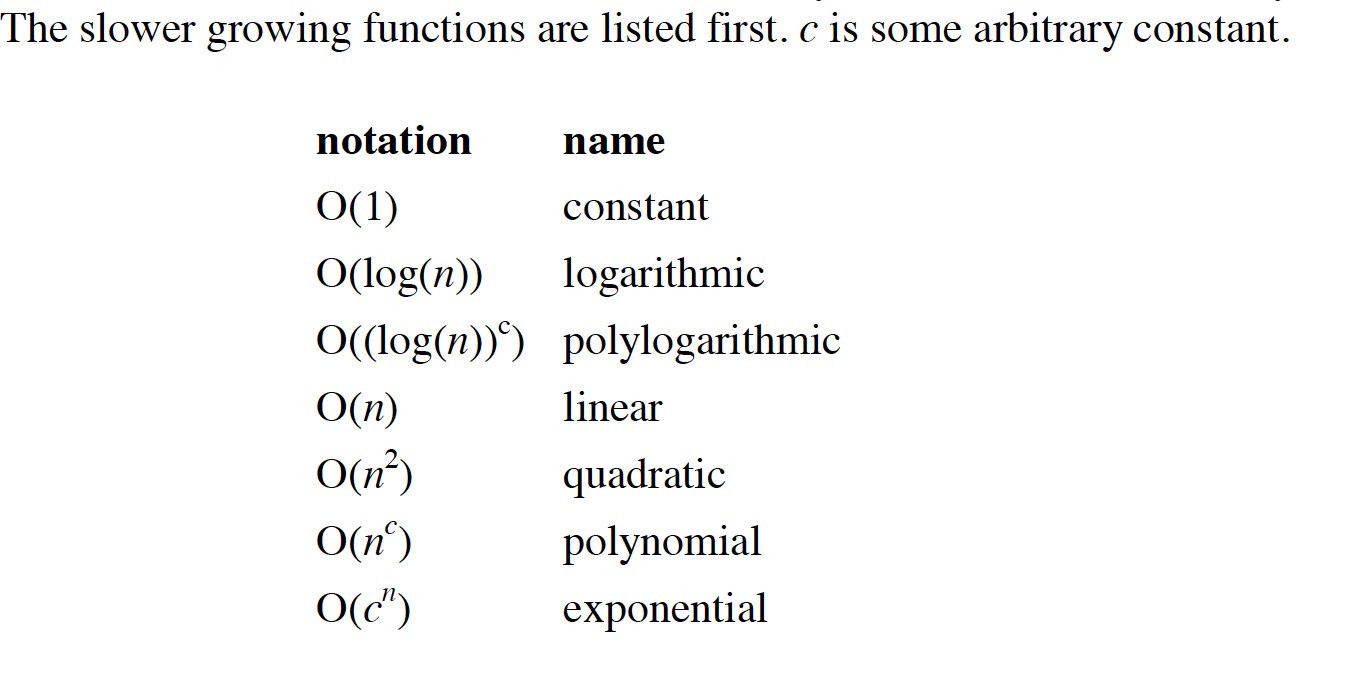

For a problem of size N:

-

-

- a constant-time algorithm is “order 1” : O(1)

- a linear-time algorithm is “order N” : O(N)

- a quadratic-time algorithm is “order N squared” : O(N2)

-

Note that the big-O expressions do not have constants or low-order terms. This is because, when N gets large enough, constants and low-order terms don’t matter

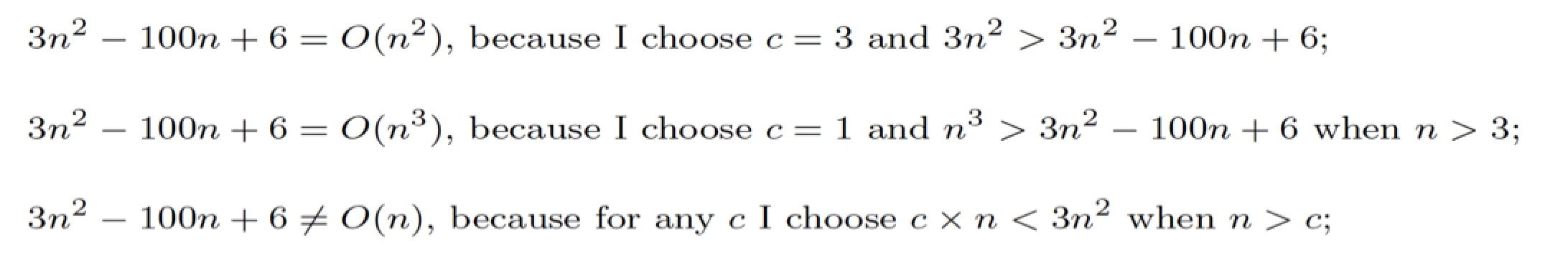

Example Big-O calculation

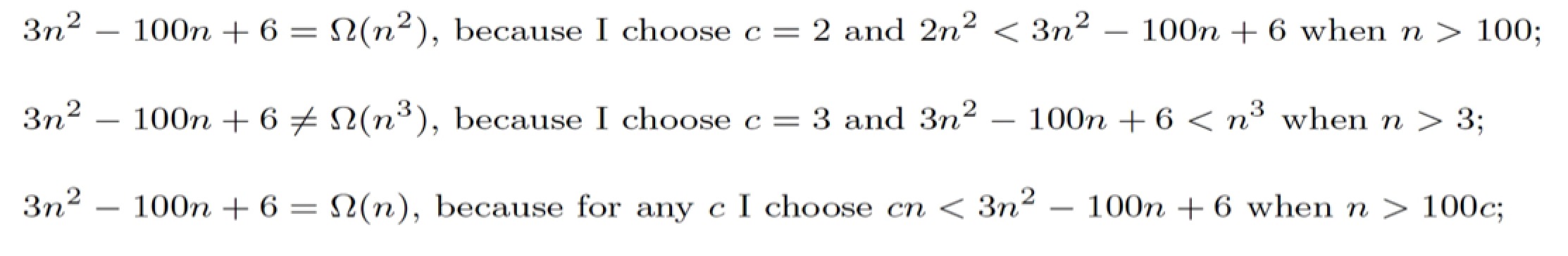

Example Big-Omega calculation

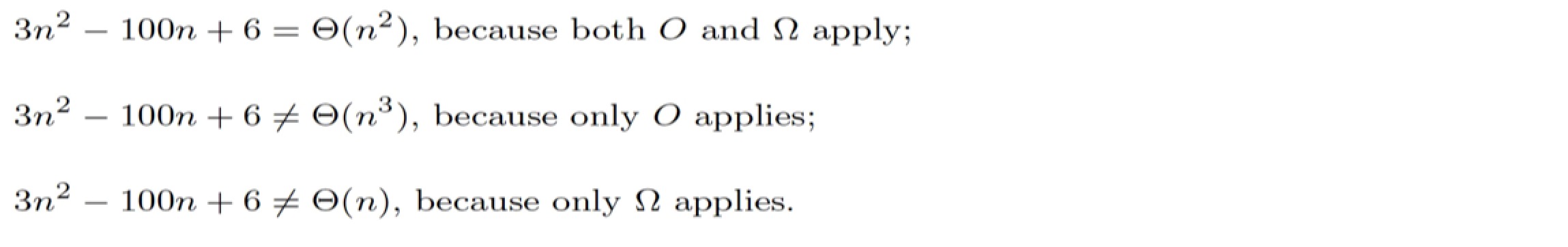

Example Big-Theta calculation

More example on Big(O)

f(n) = 3n + 8 <= 4n for all n0 = 8 and c=4 O(n) f(n) = n2 + 1 <= 2n2 for all n0 = 1 and c=2 O(n2 )

f(n) = n4 + 100n2 + 50 <= 2n4 for all n0 = 11 and c=2 O(n4)

f(n) = 2n3+ 22 <= 2n3 for all n0 = 1 and c=2 O(n3 )

f(n) = n <= n2 for all n0 = 1 and c=1 O(n )

f(n) = 410 < = 410 for all n0 = 1 and c=1 O(1)

There are no unique set of values for n0 and c in proving the asymptotic bounds. Lets Consider, 100n + 5 = O(n)

Sol 1 : 100n + 5 <= 100n + n = 101n , for all n >= 5 and c =101

Sol 2 : 100n + 5 <= 100n + 5n = 105n , for all n >= 1 and c =105

References

Algorithm Design Manual – Steven S. Skiena

Design and Analysis of Algorithm – Anany Levitin

Beginning Algorithm – Simon Harris, James Ross

Data Structure And Algorithm with javaScript – Michael McMillan

Data Structures and Algorithms Made Easy – Narasimha Karumanchi

The documentation was impressive and it helps understanding for beginners .Looking forward for such more content.It would be great if such blogs turn out into a verbal sessions.